The New Ideas Needed for AGI

The strength of LLMs is also their weakness. A truly general intelligence may require a certainty in thinking and reasoning that cannot be found in language.

“Scale is not all you need. New ideas are needed.” And not just new ideas, but new benchmarks. This was the succinct contrarian perspective of ARC Prize and Zapier co-founder Mike Knoop when we talked to him on the most recent Training Data podcast episode. ARC Prize is based on a benchmark created by Knoop’s co-founder, François Chollet back in 2019 that has stubbornly resisted solving.

The current AI moment is all about the unreasonable effectiveness of scaling the transformer architecture and LLMs, leading to the toppling of one “human-level” benchmark after another. What’s different about ARC-AGI? Why are current methods ineffective against it? What exactly are the new ideas needed?

To start with, as Chollet claims, the ARC-AGI benchmark is hard in a way many other AI benchmarks are not. It was designed to be resistant to memorization despite the amount of data and compute thrown at it. It is easy for humans, but hard for AIs. In fact, its solution would mean that the system was able to learn a novel task from a very small amount of data—precisely the opposite of what is now possible.

The puzzle at the heart of the problem

Founders in the AI application space can see where customers are deploying LLM-based solutions—and more critically where they are not. It is no surprise that customer service is the leading outbound use case. It is a labor-intensive undertaking that has previously been automated badly, with notoriously low NPS scores. And there’s already a human in the loop, but with LLMs that engagement is limited to only escalated cases.

There are also many internal use cases that are able to save workers significant time even if they fail some of the time. But the big bottleneck to more widespread adoption of AI products is the lack of trust stemming from the unconstrained nature of LLMs. Workflow automation software, like Knoop’s Zapier, is formally verifiable to do what it says on the tin. LLMs, by contrast, can perform off-label fabrications (unpredictably) unless constrained by the structure of a robust cognitive architecture.

Knoop is a big admirer of Rich Sutton’s prescient essay, The Bitter Lesson (as is everyone we’ve talked to on the podcast). He points out that although Sutton is even more correct about the effectiveness of scaling learning and search today than he was five years ago, the one aspect of AI that has not benefited yet from scaling is architecture search itself. Indeed, the solutions that have been most effective so far against the ARC-AGI benchmark all explore architecture space in an automated and dynamic way, rather than hand-crafting static architectures as most researchers continue to do.

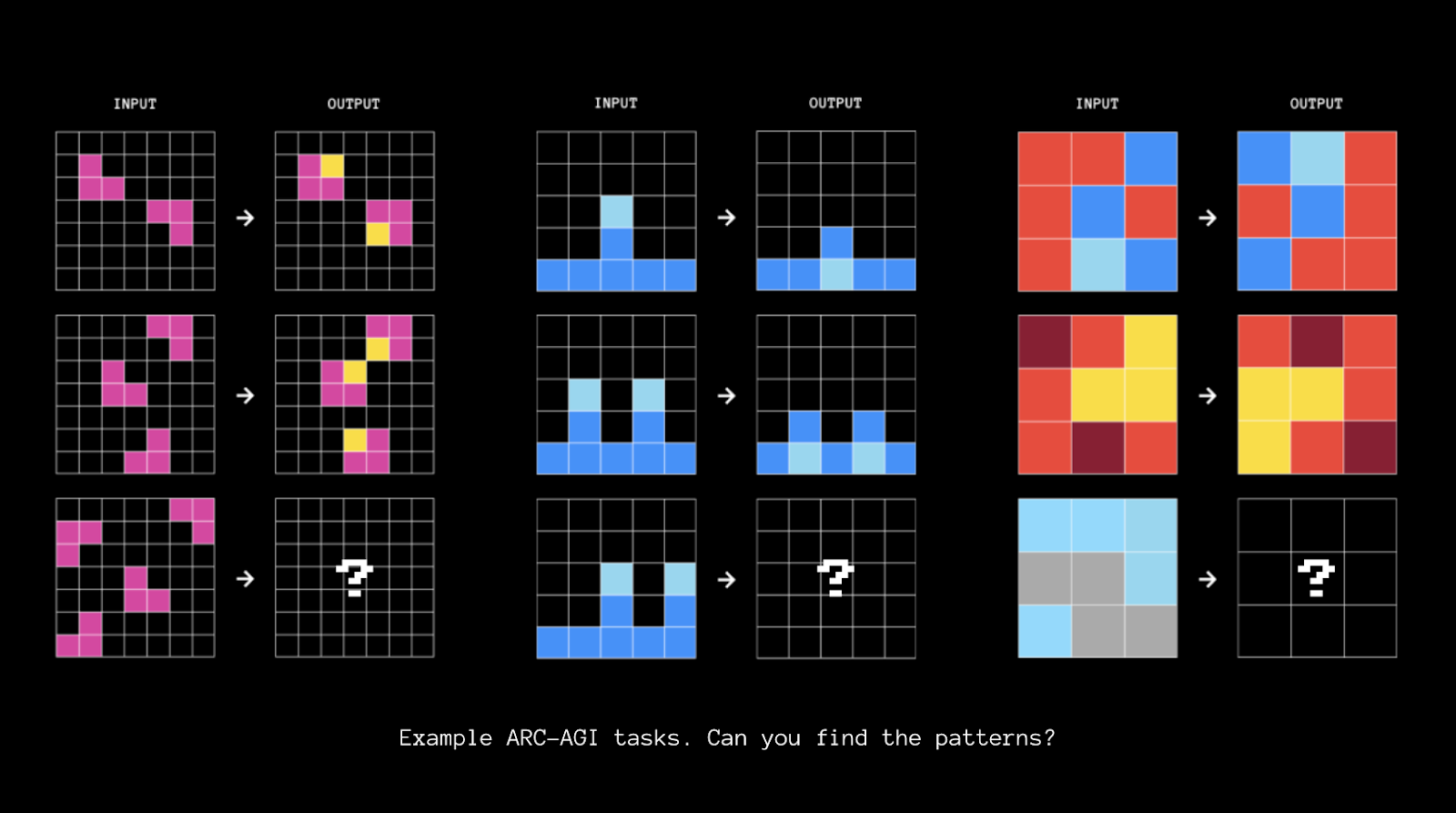

Chollet defines AGI as the efficiency of acquiring new skills. The ARC-AGI benchmark itself is a set of 400 matrix puzzles that unambiguously describe patterns that humans are able to match intuitively based on a core set of knowledge priors, like symmetry and rotation, that machines have had difficulty learning precisely and reliably. Chollet designed the tasks so that a human-level score across the test set would mean that the system could efficiently learn a novel task in this highly variable but internally consistent puzzle space.

What this suggests (and what contemporary neuroscience is proving) is that there are non-linguistic aspects to thinking and reasoning that need to be represented with something other than language. All the words in the world wouldn’t provide sufficient training data to actually learn these priors. Solving ARC would seem to require different tokens learned in a different way from the likes of GPT-4o and Claude Sonnet.

Transforming the transformers

Knoop also observes that the transformer architecture is formally capable of representing very deep deductive reasoning chains with 100% accuracy, but what’s missing is the proper learning algorithm. The great progress with LLMs in the past few years—along with the explosion of available compute—may turn out to be necessary but not sufficient for AGI.

Benchmarks have a long history in AI, going back to the Turing test, which took 50 years to beat and yet proved nothing about the true nature of intelligence. The ARC-AGI benchmark provides a clear contrast to a previous challenge of intelligence called the Winograd Schema. Named after a pair of ambiguous sentences formulated by Stanford professor Terry Winograd (who, by the way, taught Google founders Sergey Brin and Larry Page), the schema was proposed in 2012 by Hector Levesque as an alternative to the Turing test. Although Winograd claimed in 1972 that such sentences would be impossible for computers to correctly disambiguate without “understanding” real world context, by 2019 a BERT language model was sufficiently powerful to claim 90% accuracy and the benchmark was considered solved.

ARC, by contrast, is constructed to model deterministic certainty. Each puzzle can be solved, and can be solved only one way. To learn such a set of puzzles is to learn a sense of invariance in the way that 1 + 1 = 2 or that a note is a pitch perfect C. This precision is exactly what’s missing in the intelligence generated by LLMs.

Knoop describes LLMs as “effectively doing very high dimensional memorization.” The power comes from running inference on the resulting models to generate high-dimensional output with the appearance of veracity. You can think of LLMs as looms on which you can weave digital media to order on demand.

The models that might win ARC would generate artifacts more solid and precise than these language looms—perhaps forges? What AGI requires is an armature flexible enough to learn new things but certain enough to be reliable about what it has learned.

Outsiders wanted

What we want AI to learn—what we hope AGI will be—is obviously way beyond the two dimensional puzzles contained in the ARC test set. It would be easy to dismiss this benchmark as another toy problem without real world application. But the style of learning that a winning system implements will most likely be something new, something that goes beyond the scaling of data and compute that all of the current frontier labs are pursuing. Such an AGI would also likely be safer and slower to roll out, less a singularity than a continuation of human stair step technological progress.

An important part of the ARC Prize is promoting the open sourcing of solutions to the ARC-AGI benchmark. By calling attention to the problem, and offering more than $1M for a better than 85% solution, Knoop and Chollet hope to reshape the research space back towards the kinds of open collaboration that led to our current breakthroughs.

The big labs are also clearly exploring new ideas, but perhaps more as a side quest than a main quest. From our experience with founders we know that the singular focus of, as Factory’s Matan Grinberg says, being “more obsessed than everyone else working in this space” is what leads to breakthroughs.

Knoop and Chollet’s quest to advance progress on an unmoved benchmark has echoes of Patrick Collison’s calls for increasing the velocity of science through developing more agile and interdisciplinary institutions. When we asked Knoop who he thought would provide the winning solution to ARC Prize he said, “I think it’s going to come from an outsider, somebody who just thinks a little bit differently or has a different set of life experience, or they’re able to cross pollinate a couple of really important ideas across fields.”