Last January, we compared ChatGPT to AI’s “Big Bang” and predicted that 2024 would be AI’s “primordial soup” year. The AI ecosystem was abounding with new ideas and potential energy. It was a ripe moment for new entrepreneurs. “There is much potential in the air, and yet it is still amorphous,” we wrote at the time. “Vision is required to convert it into something real, tangible and, ultimately, impactful.”

Today, the AI ecosystem has hardened. There are now five “finalists” in the race for biggest model. Nvidia’s highly anticipated Blackwell chip is shipping this month. Data centers, many of which were planned in early 2024, are entering full-on build mode. TSMC is building new fab capacity and Broadcom is working on custom AI chips: the entire supply chain has shifted into high gear. In every industry from healthcare to law to insurance, new AI initiatives are kicking off.

If 2024 was the primordial soup year for AI, the building blocks are now firmly in place. AI’s potential is now congealing into something real and tangible—embodied by physical data centers that are rising up all across America from Salem, PA to Round Rock, TX to Mount Pleasant, WI. If 2024 was about new ideas abounding, 2025 will be about sifting through those ideas to see which really work.

Below, we offer three predictions for the year ahead:

1. LLM providers have evolved distinct superpowers—this should lead to incremental differentiation and a contested pecking order in 2025

In 2024, the big model race was all about reaching parity with GPT-4. Five companies achieved this objective (or got close enough) and thus became “finalists”: Microsoft/OpenAI, Amazon/Anthropic, Google, Meta and xAI. Others dropped out of the race, most notably Inflection, Adept and Character.

To get to GPT-4 quality, these companies ran roughly the same playbook: Collect as much data as possible, train on as many GPUs as possible and refine the pre-training/post-training architecture to maximize performance. With talent moving fluidly across organizations in 2024, few trade secrets remain.

As each player prepares for the next round of LLM scaling—which will likely involve another 10x increase in compute scale—the labs are evolving differentiated superpowers. They have “chosen their weapons,” so to speak, for the battle ahead. In 2025, these distinct strategies should lead to disparate outcomes, with some players pulling ahead and others falling behind.

- Google – Vertical Integration: Google’s advantage going into 2025 is vertical integration. Google is the only player with its own first-class chips: TPUs have a chance to give Nvidia GPUs a run for their money in 2025. Google also builds its own data centers, trains its own models and has a very strong internal research team. Unlike Microsoft and Amazon, which have partnered with OpenAI and Anthropic, respectively, Google is going for the prize by owning every part of the value chain.

- OpenAI – Brand: We’ve seen a handful of surveys on unaided awareness of ChatGPT vs. Claude vs. Gemini, and it’s not close. OpenAI has the strongest brand in AI, bar none. This has resulted in the strongest revenue engine among the big AI players, with OpenAI reportedly north of $3.6B in revenue. If success in AI ends up hinging on consumer mindshare and enterprise distribution, OpenAI may continue to widen the gap between itself and rivals.

- Anthropic – Talent: 2024 saw a big exodus of research talent from OpenAI, paired with inflows to Anthropic. With John Schulman, Durk Kingma and Jan Leike all leaving OpenAI for Anthropic in 2024, Anthropic has been gaining mindshare with research talent. The company also made some big executive hires, adding Instagram co-founder Mike Krieger as Chief Product Officer. With GPT-3 inventor Dario Amodei at the helm, Anthropic has carved out its position as a favored destination for AI scientists.

- xAI – Data Center Construction: We wrote in our piece on “Steel, Servers, and Power” about the importance of data center construction to the next phase of the AI race. With xAI bringing its 100k-GPU Colossus cluster online in record time, the company is now the pace setter for data center scaling. The next milestone for xAI and its rivals will be a 200k-GPU cluster and then a 300k-GPU cluster. If it ends up being true that “scale is all you need,” xAI is well-positioned to continue its rapid ascent.

- Meta – Open Source: Meta, which already has strong distribution advantages through Instagram, WhatsApp and Facebook, has chosen to go all-in on open source. Meta is the only big player in the pack taking this approach. Meta’s Llama models have ardent fans, and the closed-source vs. open-source debate continues to rage. If frontier advancements start to slow down, Meta will be well-positioned to leverage its open-source models for rapid dissemination of these capabilities.

In the big model race, rigorous execution lies ahead. The competitive landscape and posture of each of the players has solidified. In 2025, we will see which of these strategies prove prescient—and which prove ill-fated.

2. AI Search is emerging as a killer app—in 2025, it will proliferate

Ever since ChatGPT came out, we’ve all been on the hunt for AI’s killer use case. What persistent new user behaviors will stand the test of time?

In 2024, many different applications were tested, from AI girlfriends and AI rental assistants to voice agents and AI accountants.

One use case that we think will proliferate in 2025 is AI as a search engine. Perplexity has been on a tear since its launch, reaching 10M monthly active users. OpenAI launched ChatGPT Search in October, an expansion of its existing search-like capabilities. The Wall Street Journal ran a piece recently headlined “Googling is for Old People.” Ironically, this challenge to Google comes just as the company is mired in antitrust litigation.

AI search is a powerful re-invention of a technology that rapidly became the internet’s killer app. Internet search is a navigational technology based on indexing the web. AI search is an informational technology based on LLMs that can read and semantically understand knowledge. For white collar workers, this will be a huge boon.

AI search may fragment what is currently a monolithic market. It’s possible to imagine a world where every profession has its own specialized AI search engine: analysts and investors default to Perplexity, lawyers use platforms like Harvey, and doctors use solutions like OpenEvidence. Along these lines, one can think of Midjourney as search over the “pixelverse,” GitHub Copilot as search over the “codeverse,” and Glean as search over the “documentverse.” Unlike traditional search, AI search can go much deeper semantically, and is therefore an order of magnitude more powerful, resulting in significant incremental productivity gains.

The text response as a product surface area is deeper than first meets the eye. Not all text responses are created equal. We think LLMs allow for real product differentiation across multiple dimensions, and that founders will build unique product experiences around these capabilities, targeted at specific customer audiences:

- Intent Extraction: Tightly matching the response to the user’s intent becomes easier with domain specialization. For example, a doctor and patient asking the same question will want to see different types of responses.

- Proprietary Data: Unique datasets like case law for lawyers, financial data for analysts or weather data for insurance underwriters will be important in white collar domains. Getting the answer right is table stakes in a business context.

- Formatting: How results are presented to the user matters: how verbose or concise the response is, whether it uses bullets or multi-modal content, and how it references sources. Accountants tend to digest information differently than journalists, for example.

- Interface Design: Code search needs to live in the IDE. Accounting policy search needs to live in your accounting SaaS platform. Semantic search benefits from context around the user’s existing workflow and data. Different domains will require different interfaces.
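One way to picture these four dimensions is as a per-persona configuration that turns the same question into different requests. The sketch below is purely illustrative: `SearchProfile`, `build_request` and every field value are hypothetical names chosen for this example, not any product’s actual API, and no LLM is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchProfile:
    """Hypothetical per-persona settings for a domain-specific AI search engine."""
    persona: str                   # intent extraction: who is asking
    data_sources: tuple[str, ...]  # proprietary data used to ground answers
    style: str                     # formatting: how the answer is presented
    surface: str                   # interface design: where the search lives


def build_request(profile: SearchProfile, question: str) -> dict:
    """Combine a question and a profile into a request a search backend could consume."""
    instructions = (
        f"Answer for a {profile.persona}. "
        f"Format: {profile.style}. "
        "Cite the sources you used."
    )
    return {
        "instructions": instructions,
        "question": question,
        "sources": list(profile.data_sources),
        "surface": profile.surface,
    }


# Two illustrative personas asking the same question (values are assumptions).
DOCTOR = SearchProfile(
    persona="practicing physician",
    data_sources=("medical literature",),
    style="concise summary with references",
    surface="clinical reference tool",
)

PATIENT = SearchProfile(
    persona="patient without medical training",
    data_sources=("medical literature",),
    style="plain-language explanation in short bullets",
    surface="consumer chat app",
)

if __name__ == "__main__":
    question = "What are the treatment options for high blood pressure?"
    for profile in (DOCTOR, PATIENT):
        print(build_request(profile, question)["instructions"])
```

The same question produces two different instruction strings, which mirrors the doctor-and-patient example in the first bullet.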

New domain-specific AI search engines will map themselves as tightly as possible onto the “theory of mind” of their target personas. Doctors, lawyers and accountants don’t think alike. As we become experts in a given domain, our patterns for extracting knowledge and making decisions begin to diverge. Doctors confront medical literature, lawyers confront case law, investors confront earnings reports. The way we dissect, analyze and make decisions on this knowledge is different in each domain.

There is likely to be a bifurcation between consumer and enterprise. In our capacity as consumers, we all have roughly the same needs, hence the smashing product-market fit of ChatGPT. However, in our capacity as professionals, we have different needs. It is fairly straightforward to imagine that every knowledge worker will have at least two AI search engines they use daily—one for work and one for everything else.

3. ROI will remain problematic and CapEx will begin to stabilize in 2025

We’ve written about AI’s $200B question and AI’s $600B question, which explore the tremendous capital expenditure coming out of the Big Tech companies, and the lack of commensurate end-user revenue to pay back these cash outlays.

Going into 2024, the Big Tech companies were nervous about AI being a threat to their oligopoly in the cloud business. As we wrote in the “Game Theory of AI CapEx,” these companies felt they had no choice but to spend aggressively to ensure their continued dominance in an AI future. If they didn’t spend, others would, and they’d fall behind.

Entering 2025, the picture has changed dramatically. Big Tech companies have their arms locked firmly around the AI revolution. Not only do they control the vast majority of the data centers that power AI, but they own significant equity stakes in the big model companies, and they are among the largest backers of new AI startups.

With Big Tech feeling more confident, we think 2025 will be a stabilization year for AI CapEx. If 2024 was a scramble to sign deals for land and power, 2025 will be an execution year. Shovels are in the ground, and these companies will be focused on completing their new projects on time and on budget. They will then need to sell this installed capacity to customers and work with enterprises to help them achieve success with their new AI capabilities.

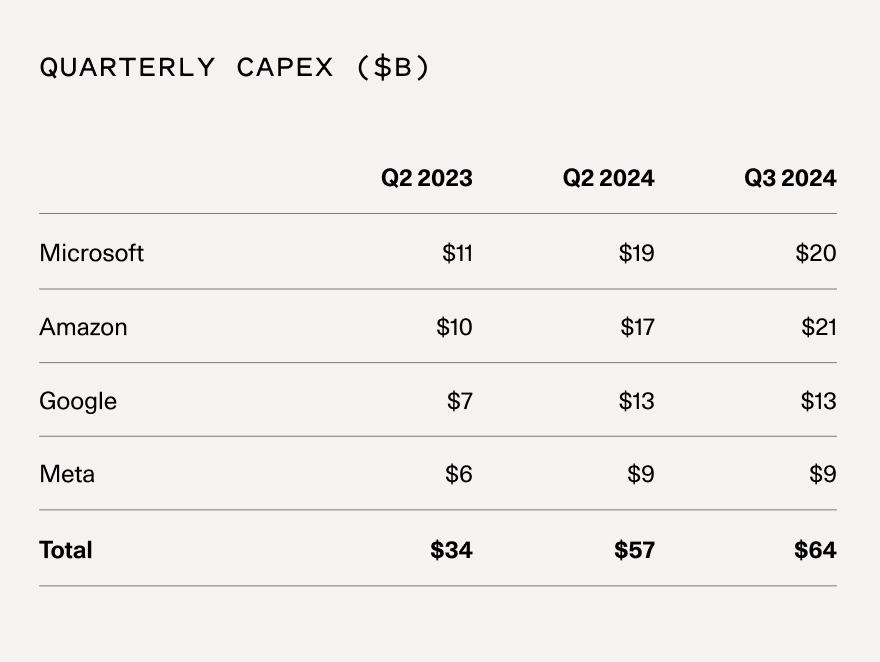

After CapEx roughly doubled from pre-ChatGPT levels, we may see some normalization in 2025. The latest CapEx figures released in Q3 suggest that the trendline is already starting to stabilize inside of Microsoft and Google. Amazon and Meta are still ramping, but may reach steady state in early 2025. (While Meta looks flat in the chart above, the company has issued guidance for increased CapEx in Q4.)

Oligopolistic dynamics are likely to set in as well. Each of the Big Tech companies follows its rivals closely. If it looks like the industry is on a glide path to a “new normal,” that may be welcome news for all. It would provide further support for a new equilibrium in 2025 vs. continued ratcheting of spend.

As new data center capacity comes online in 2025, AI compute prices should continue their epic decline. This is great news for startups and should incentivize net-new innovation. As we’ve pointed out in the past, startups are primarily consumers of compute vs. producers of compute, and so they benefit from overbuilding. The Big Tech companies are effectively creating a subsidy that will accrue to the entire AI ecosystem.

Many comparisons have been made between the Clouds and the railroad oligopoly of the Gilded Age. If data centers are indeed the rails of the digital economy, then the new AI rails will be securely in place by the end of 2025. The question remains what freight will ride on those rails, and how we can leverage this new technology to create value for customers and end-users.

Here’s to a year of leveraging AI’s building blocks to create incredible new capabilities that will change people’s lives.

If you are building a visionary company in AI, I’d love to hear from you. Please reach out at dcahn@sequoiacap.com